Implications of AI for Systems Integration

Trusting Artificial Intelligence (AI)

A judge in the US recently threw out a lawsuit and fined the plaintiff’s lawyers $5,000 after they admitted using ChatGPT to research supporting case law. The AI tool had simply invented the cases it supplied, modelling them on material it had absorbed from the internet. It seemed unable to tell the difference between real and fake, and gave the users what it predicted they wanted.

The tool had been trained on vast amounts of published material, including real judgements, and used it to invent convincing cases. Its raw material came from real life, but if fake cases can be created this easily, how far will we be able to trust AI results in future? The invented cases may remain on the internet, waiting for the next AI research tool to find them and use them to bolster another spurious legal argument.

AI and the bottom line

It seems that everyone is talking about AI. Organisations all over the world are embracing the new technology – sometimes with real purpose and sometimes because they feel they should. Accenture reports that in 2021, companies mentioning the use of AI in their fiscal reporting were 40% more likely to see their share price increase.

It can feel as though a business strategy that doesn’t mention AI is missing a major component. AI offers an opportunity to gain a competitive edge through real applications, though sometimes it seems to be a solution searching for a problem.

The systems integration industry reflects this trend, with vendors offering to speed up systems delivery from weeks to minutes by making AI the beating heart of the next generation of integration software.

Clearly, any reduction in time and effort is worth considering, particularly when moving data around, but it is important to understand whether AI really is the answer to your problem and what conditions it needs to be cost-effective.

‘If the data used to train an AI model is inaccurate, incomplete, inconsistent, or biased, the model’s predictions and decisions will be too. High-quality data results in AI systems being able to make more accurate predictions, provide relevant recommendations, and effectively automate processes.’

Gary Drenik, Forbes 2023

Eliminating bad data with effective integration

AI relies on accurate data. AI tools trawl datasets and the internet for answers to questions and for statistics to support their decisions, so the quality of that data has become ever more vital. As the US lawyers found, there are many stories of AI delivering results that are inaccurate, misleading or completely fictional, often because the underlying data was inaccurate, out of date or invented.

Results from AI tools are only as reliable as the data they are crunching. The tools mash together pools of information to produce their results, and much of that information is sourced from the internet. This is a huge dataset but, unfortunately, the internet is a mass of real facts interwoven with lies. The problem AI needs to solve is how to sift out the fake and keep the true.

Data within organisations is also often badly structured, inaccurate or incomplete. Even the best AI tool will produce spurious results if it has only poor-quality data to work with.

When using AI tools within an organisation, it is fundamental to understand, and be able to rely on, all your data sources. Even if the tools are only trawling your own databases, you need to ensure that those databases are up to date and accurate. AI tools can analyse high volumes of data far faster than humans can, which also means they can spread the effects of bad data far faster. To ensure accurate results, you need to be confident that the data is clean.
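What counts as "clean" will differ between organisations. As a minimal sketch, the following Python shows how a batch of records could be screened for three of the problems described above before an AI tool sees it: missing fields, out-of-date records and duplicate keys. The `SupplierRecord` type, its field names and the 90-day freshness threshold are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical record type: field names are illustrative, not from any real system.
@dataclass
class SupplierRecord:
    supplier_id: str
    name: Optional[str]
    country: Optional[str]
    last_updated: datetime

REQUIRED_FIELDS = ("supplier_id", "name", "country")
MAX_AGE = timedelta(days=90)  # assumed freshness threshold for this example

def quality_issues(record: SupplierRecord, now: datetime) -> list[str]:
    """Return the problems found in one record (an empty list means it looks clean)."""
    issues = []
    # Completeness: every required field must be present and non-blank.
    for field in REQUIRED_FIELDS:
        value = getattr(record, field)
        if value is None or not str(value).strip():
            issues.append(f"missing {field}")
    # Timeliness: flag records that have not been refreshed within MAX_AGE.
    if now - record.last_updated > MAX_AGE:
        issues.append("stale")
    return issues

def split_clean(records: list[SupplierRecord], now: datetime):
    """Separate clean records from those that need attention before AI analysis."""
    clean, rejected = [], []
    seen_ids = set()
    for r in records:
        problems = quality_issues(r, now)
        # Consistency: the same key should not appear twice in one batch.
        if r.supplier_id in seen_ids:
            problems.append("duplicate supplier_id")
        seen_ids.add(r.supplier_id)
        if problems:
            rejected.append((r.supplier_id, problems))
        else:
            clean.append(r)
    return clean, rejected

# Usage with made-up sample data.
now = datetime(2024, 1, 31, tzinfo=timezone.utc)
records = [
    SupplierRecord("S1", "Acme Ltd", "GB", now - timedelta(days=10)),
    SupplierRecord("S2", None, "DE", now - timedelta(days=5)),
    SupplierRecord("S3", "Globex", "US", now - timedelta(days=400)),
    SupplierRecord("S1", "Acme Ltd", "GB", now - timedelta(days=1)),
]
clean, rejected = split_clean(records, now)
print([r.supplier_id for r in clean])  # ['S1']
print(rejected)  # [('S2', ['missing name']), ('S3', ['stale']), ('S1', ['duplicate supplier_id'])]
```

Rejected records are returned with their reasons rather than silently dropped, so they can be fixed at source instead of quietly disappearing from the analysis.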

Data Architecture

Robust data architecture practice across the organisation will help. There are even AI tools that can map out your data and describe its use and meaning. Knowing and understanding your data is the first step towards fast, accurate decisions based on the latest technology.

Integration Solutions

Robust integration methods are now even more essential to ensure that clean, accurate, complete and timely data is available for your organisation, and its AI tools, to use. The methods for getting information in and out of your data lakes and warehouses have become critical to effective data management. Whatever tools you use to deliver data, the data needs to be accessible and searchable so that AI engines can analyse it to solve problems.

Integration software connects complex functions together. It should be simple to understand, easy to change, and accurate. Whether you build it in-house or use a SaaS or iPaaS provider, it needs to deliver robust, reliable solutions that keep your AI and other systems running smoothly.

Managing change across the data

Systems landscapes change all the time and this means that integration paths need to change too.

Managing integration change needs to be simple, well governed and compliant. This will help ensure that the AI tools analysing your data can be trusted to deliver competitive decisions rather than gobbledegook.

Testing Accuracy

Accuracy is vital to decision-making, and AI solution providers are working hard to improve the accuracy of their products. Poor data quality can be disastrous, producing results that can’t be trusted.

Much of the data relevant to the questions AI tools are asked to answer is hidden from them. This can skew results so that they don’t reflect the real world. Once published, these inaccurate results can be picked up by other AI tools, pulling their output further and further from the truth. Decisions based on such false results can have huge implications for organisations, societies and the world.

AI tools are assessed for accuracy using a range of metrics, such as precision and recall as well as overall accuracy. Delivering consistently high accuracy is difficult because of poor data quality and bias. The questions AI is asked to address are usually complex, and human interventions can send the tool off in an inappropriate direction.
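A single accuracy figure can hide serious failures, which is one reason several metrics are used. The minimal Python sketch below assumes a hypothetical tool that flags problem records (for example, suspect invoices) and compares its flags with the known answers. The sample data is made up: in it, a tool that never flags anything still scores 80% accuracy while catching none of the real problems.

```python
def classification_metrics(actual: list[bool], predicted: list[bool]) -> dict[str, float]:
    """Compute accuracy, precision and recall for yes/no predictions."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and the same length")
    # Count the four possible outcomes.
    tp = sum(a and p for a, p in zip(actual, predicted))          # correctly flagged
    tn = sum(not a and not p for a, p in zip(actual, predicted))  # correctly ignored
    fp = sum(not a and p for a, p in zip(actual, predicted))      # false alarms
    fn = sum(a and not p for a, p in zip(actual, predicted))      # missed problems
    accuracy = (tp + tn) / len(actual)
    # Precision: of the records flagged, how many were real problems?
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: of the real problems, how many were flagged?
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}

# Made-up example: 2 of 10 records are genuinely problematic,
# but the hypothetical tool flags none of them.
actual = [True, True] + [False] * 8
predicted = [False] * 10
metrics = classification_metrics(actual, predicted)
print(metrics)  # {'accuracy': 0.8, 'precision': 0.0, 'recall': 0.0}
```

Precision is reported as 0.0 when nothing is flagged; that is a convention chosen for this sketch, and some libraries treat the case differently.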

As the technology advances, new ways to test the results of searches and decision-making are being delivered, and improvements in machine learning should make output more reliable over time. For now, however, it pays to be cautious and to ensure that the factors under your control – data quality, data control, configuration options and architecture – are as clean as possible.

Using tried and tested tools

Moving your data around using effective integration methods has never been more important. It pays to work with partners who can ensure your data is delivered accurately and on time.

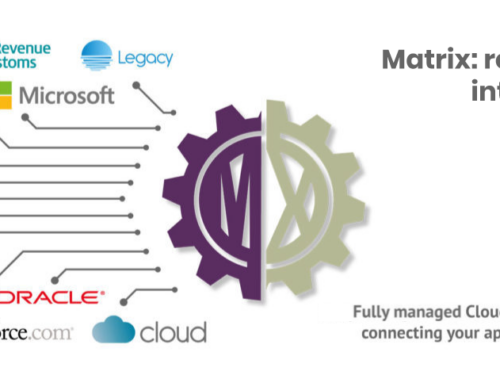

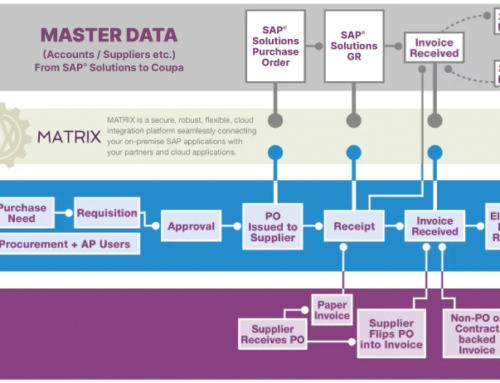

Mandant have deep experience of delivering integration solutions for Coupa BSM and SAP ERP implementations. Their cloud-based integration engine, MATRIX, is designed to deliver pre-built business scenario solutions to connect Coupa to existing landscapes at speed. The modules are already live, in use by other clients, and fully tested. Connecting to existing modules is fast and safe.

Mandant also deliver solutions for systems landscapes beyond Coupa and SAP. If there is a knotty integration problem to solve, Mandant have the answers, and where integration simplification is the goal, they can draw on years of experience to find the solution.

Contact us

Mandant have 25 years’ experience of delivering expert integration solutions to satisfied clients. Find out today how they can help you manage difficult change.

Find out more about how Mandant can solve your integration challenges and help deliver your Coupa, SAP or integration project. Visit www.mandant.net or email info@mandant.net

Mandant eBooks

Unlocking your SAP integration challenges

Cloud migration – 8 barriers to moving SAP® solutions to the cloud.

Acknowledgements:

- Photo by Steve Johnson on Unsplash

- Photo by Anne Nygård on Unsplash

- Photo by Markus Spiske on Unsplash